In this chapter we shall learn about

4.1 What are Asymptotic Notations [AN]?

4.2 List of order of growth of computing time.

4.3 List of points that will affect the running time.

4.4 Why we ignore constants while calculating the complexity of algorithms?

4.5 Types of Asymptotic Notations.

4.1 What are Asymptotic Notations [AN]?

AN are mathematical functions that are used to calculate running time and also the rate of growth of an algorithm.

In the previous chapters we saw on how to analyze space and time complexity. With the help of previous learnings, we shall see how to calculate asymptotic notations.

Running time refers to the time taken for the computer to run all the statements in an algorithm.

Rate of growth refers to how the algorithm will behave when a large amount of input is given.

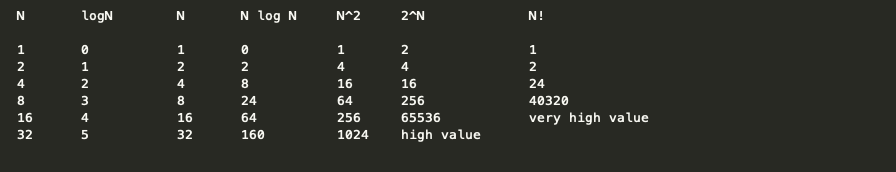

4.2 Below are some of the order of growth of computing time:

From the above table we can see the order of growth for a logarithmic algorithm is lesser than that of an exponential one. Hence if we have 2 algorithmic solutions, then we should go for the one that consumes less time.

logN: The running time is logarithmic. Algorithms like binary search.

N: The running time is linear. Algorithms like linear search.

NlogN: The algorithm like quick sort and merge sort.

N^2: The running time is quadratic. They usually will be having 2 loops. Algorithms like: Bubble sort, selection sort.

2^n: The running time is exponential.

4.3 When we run a program, below are the list of points that will effect the running time:

1. Speed of computer

2. Compiler Used

3. Programming Language

4. Algorithm of choice

5. Types of input and output

6. Size of input and output

As we don’t have control over first 3 points, we ignore them. We concentrate on next 3 points.

4.4 Why we ignore constants while calculating the complexity of algorithms?

This can be answered in 2 steps:

1. When we have a time complexity of “O (n^2 + 3)”, we ignore the constant “3”, and find the total time complexity as “O(n^2)”. This is because, we always want to know how the algorithm will perform for a larger value of “n”. For example, if the value of “n” is “10000”, then the constant “3” will be negligible. Hence we ignore it.

2. When we have a time complexity of “O(3n^2)”, we ignore the constant “3” and final time complexity will be “O(n^2)”. This is because, different computer architecture will be having different ways to handle the constant “3”. A faster computer will be able to store the constant in the register and is able to access it faster. A slower computer will hold it in memory hence is slower. As we are interested no the algorithm performs not on how the hardware performs, we ignore that constant.

4.5 Types of Asymptotic Notations:

Big O: We use this notation to calculate the worst case and is called as tight upper bound.

Big Omega: We use this notation to calculate the lower bound.

Bit Theta: We use this notation to calculate the average case.

Let us understand Best, average and worst case with the help of an example:

Consider that we are searching for a key in list of 10 items. We search one by one, a linear search.

Here Best-case will be, the key present at the first item itself.

Average case is, the key present somewhere in the middle of the 10 items.

Worst case is the key present at the last item.