In this tutorial, we shall learn about LRU cache and why it is important in System Design.

LRU is used for cache eviction. Cache eviction is a process where stored cache will be released when it reaches a certain limit. There are many algorithms used for cache eviction like LRU – Least Recently Used, FIFO – First In First Out, LIFO – Last In First Out, TLRU – Time Aware Least Recent Used. Among those LRU is the more used one.

During cache eviction, which item to be removed is very important. You cannot remove the most recently used item, you cannot remove the most used item that is used frequently.

LRU stands for Least Recently Used. This policy states that if your cache limit is reached while removing the cache removes the least used item.

How to implement it?

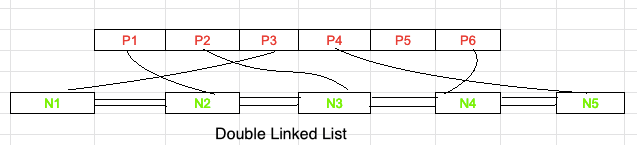

LRU is implemented by Doubly Linked List (DLL) and Hashmap.

So in our example, we have 6 DLL nodes and 6 values in a HashMap. DLL is used to store the cache value. Whenever there is a new cache it will be stored at the front, by this way the least used will be at the back.

While inserting a new value, we shall first check the DLL and if there is a value matching to the new value, then we move that node in the beginning. Else the new value will be inserted in the beginning.

But why do we need HashMap?

For searching the value in DLL it will take O(n) time. Hence to reduce the time complexity we use HashMap. Hence by using HashMap, we can search in constant time O(1).

So when the cache is full, we remove the last node of DLL and move the new item at the start of the linked list.